To generate reports in my Qt-based software, I create a QTextDocument, which may contain some Unicode characters, convert it to HTML, and then save it to a file.

    QFile file( fileName );

    if ( !file.open( QIODevice::WriteOnly | QIODevice::Text ) )
        return false;

    QTextStream out( &file );

    out << mDoc.toHtml();

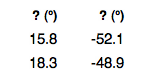

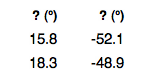

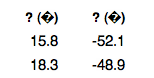

But the Unicode characters were not displayed properly when I opened the file in a browser:

Unicode Not Displayed Properly

Taking a look at the generated HTML, I noticed that the content encoding was not being set:

    <!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.0//EN" "http://www.w3.org/TR/REC-html40/strict.dtd">
    <html><head><meta name="qrichtext" content="1" />

So I thought I could simply pass the encoding to toHtml(), which would set it in the HTML header:

    QFile file( fileName );

    if ( !file.open( QIODevice::WriteOnly | QIODevice::Text ) )
        return false;

    QTextStream out( &file );

    out << mDoc.toHtml( "utf-8" );

    <!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.0//EN" "http://www.w3.org/TR/REC-html40/strict.dtd">
    <html><head><meta name="qrichtext" content="1" /><meta http-equiv="Content-Type" content="text/html; charset=utf-8" />

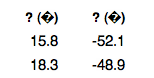

That looks better! But wait…

Unicode Still Not Displayed Properly

Uhhh… That looks worse.

After scouring the interwebs, I eventually found the answer. QTextStream encodes its output based on the system locale, so if you don't set the codec explicitly, the bytes in the file may not match the charset declared in the HTML header. According to the QTextStream docs:

> By default, QTextCodec::codecForLocale() is used, and automatic unicode detection is enabled.
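To see why declaring `utf-8` in the header is not enough on its own, here is a minimal sketch in plain C++ (no Qt involved). `encodeUtf8` and `encodeLatin1` are hypothetical helpers written just for this illustration, and Latin-1 is only an assumed stand-in for whatever non-UTF-8 codec a system locale might select; real codecs differ in the details.

```cpp
#include <string>

// Hypothetical helper: encode Unicode code points as UTF-8 bytes.
// Assumes the input holds valid code points (no validation is done).
std::string encodeUtf8(const std::u32string& text) {
    std::string out;
    for (char32_t cp : text) {
        if (cp < 0x80) {                       // 1 byte: plain ASCII
            out += static_cast<char>(cp);
        } else if (cp < 0x800) {               // 2 bytes
            out += static_cast<char>(0xC0 | (cp >> 6));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        } else if (cp < 0x10000) {             // 3 bytes
            out += static_cast<char>(0xE0 | (cp >> 12));
            out += static_cast<char>(0x80 | ((cp >> 6) & 0x3F));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        } else {                               // 4 bytes
            out += static_cast<char>(0xF0 | (cp >> 18));
            out += static_cast<char>(0x80 | ((cp >> 12) & 0x3F));
            out += static_cast<char>(0x80 | ((cp >> 6) & 0x3F));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        }
    }
    return out;
}

// Hypothetical helper: encode as Latin-1, standing in for a non-UTF-8
// locale codec. Code points above 0xFF cannot be represented and are
// replaced with '?'.
std::string encodeLatin1(const std::u32string& text) {
    std::string out;
    for (char32_t cp : text)
        out += (cp <= 0xFF) ? static_cast<char>(cp) : '?';
    return out;
}
```

With these helpers, `encodeUtf8(U"\u03B1")` (Greek alpha) produces the two bytes 0xCE 0xB1, while `encodeLatin1(U"\u03B1")` produces a single `?`. Pure ASCII text comes out identical either way, which is why the problem only shows up once non-ASCII characters are involved. A browser that has been told `charset=utf-8` can only render the text correctly if the bytes on disk really are UTF-8.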

The automatic detection did not help in my case (as far as I can tell, it looks for a byte order mark when reading, so it has no effect on what gets written). The solution is to set the encoding manually using QTextStream::setCodec(), like this:

    QFile file( fileName );

    if ( !file.open( QIODevice::WriteOnly | QIODevice::Text ) )
        return false;

    QTextStream out( &file );

    out.setCodec( "utf-8" );

    out << mDoc.toHtml( "utf-8" );

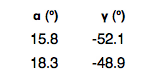

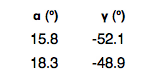

Aha! Now my Greek looked Greek!

Unicode Displayed Properly